DIRAC

Description

The DIRAC program computes molecular properties using relativistic quantum chemical methods. It is named after P.A.M. Dirac, the father of relativistic electronic structure theory.

Versions

The following versions of the DIRAC program are currently available:

- Runtime dependencies:

  - foss/2021a

  - Python/3.9.5-GCCcore-10.3.0

  - HDF5/1.10.7-gompi-2021a

You can load the selected version, together with its runtime dependencies, as a single module with the following command:

module load DIRAC/22.0-foss-2021a

User guide

You can find the software documentation and user guide on the official DIRAC website.

Benchmarking DIRAC

To understand how DIRAC uses the hardware available on Devana, and how to get good performance, we examine how the number of MPI ranks and OpenMP threads per node affects benchmark performance.

The following commands were used to run the benchmarks:

export OMP_NUM_THREADS=$ntomp

pam-dirac --mpi=$ntmpi --ag=$mem_cpu --scratch=$dirac_dir --inp=$benchmark.inp --mol=$benchmark.mol

The value of mem_cpu was computed as echo "scale=0; ($SLURM_MEM_PER_NODE / (64 * $ntomp))" | bc. The temporary working directory dirac_dir was set to /scratch.
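The relationship between the thread count and the value passed to --ag can be sketched as below. This is only an illustration under stated assumptions: cores_per_node=64 mirrors the constant 64 in the expression above, the rule that ranks times threads fills one node is assumed rather than taken from the benchmarks, the helper name mem_per_cpu is hypothetical, and the fallback of 256000 is a placeholder used when the sketch runs outside a Slurm job. Shell integer division is used in place of bc; it truncates in the same way as bc with scale=0.

```shell
#!/bin/bash
# Assumption: 64 mirrors the constant in the benchmark expression above.
cores_per_node=64

# Hypothetical helper: value handed to --ag for a given thread count.
# Integer division truncates, like bc with scale=0.
mem_per_cpu() {
    local mem_per_node=$1
    local ntomp=$2
    echo $(( mem_per_node / (cores_per_node * ntomp) ))
}

# Placeholder fallback so the sketch also runs outside a Slurm job.
mem_per_node=${SLURM_MEM_PER_NODE:-256000}

for ntomp in 1 2 4 8 16; do
    # Assumed rule: MPI ranks x OpenMP threads fills one node.
    ntmpi=$(( cores_per_node / ntomp ))
    mem_cpu=$(mem_per_cpu "$mem_per_node" "$ntomp")
    echo "ntmpi=$ntmpi ntomp=$ntomp mem_cpu=$mem_cpu"
done
```

Each printed line corresponds to one candidate combination of $ntmpi, $ntomp and $mem_cpu for the pam-dirac command shown above.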

Benchmarks were run on the following systems:

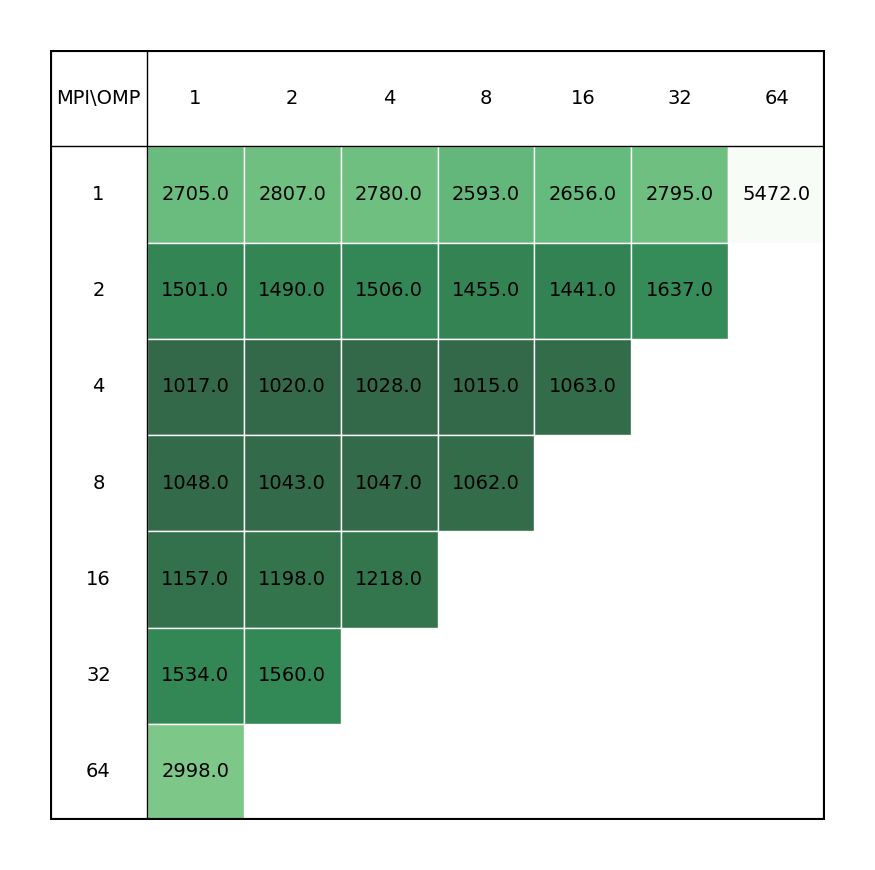

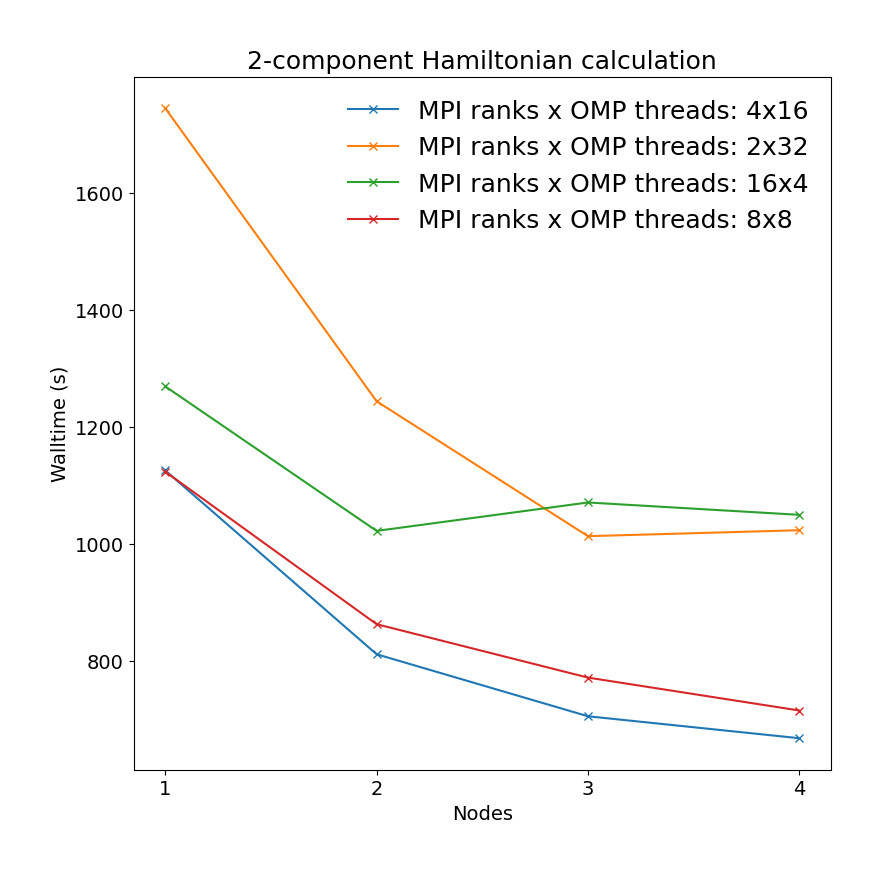

SCF calculation of PbF molecule utilizing 2-component X2C Hamiltonian.

| Single node Performance | Cross-node Performance |

|---|---|

|

|

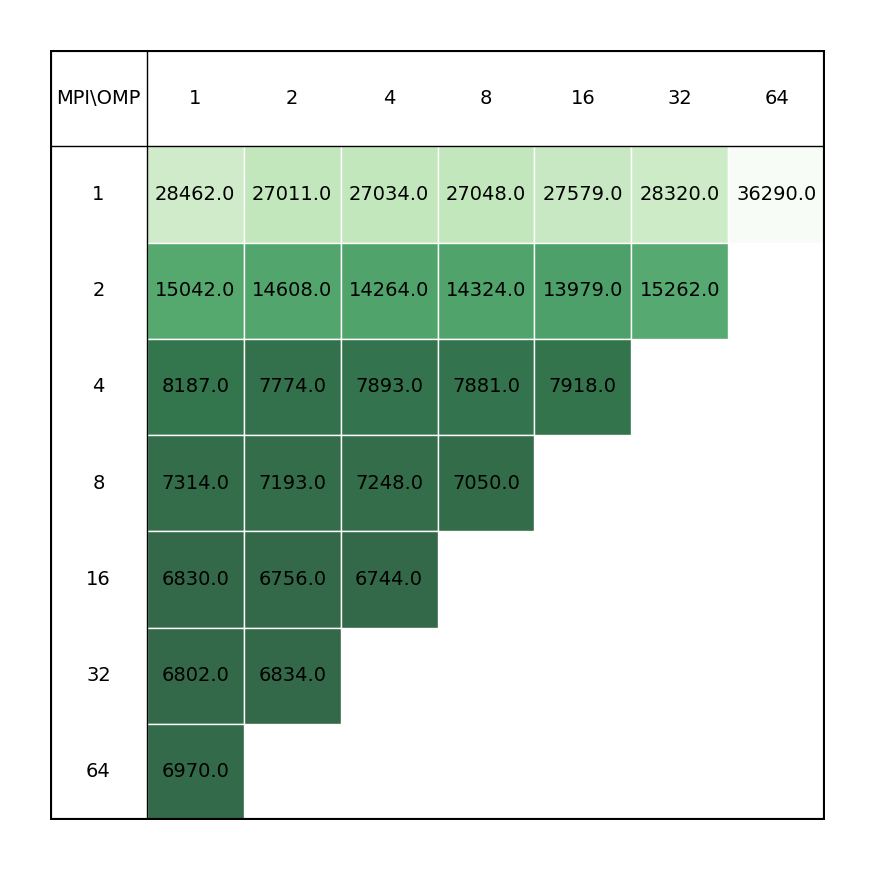

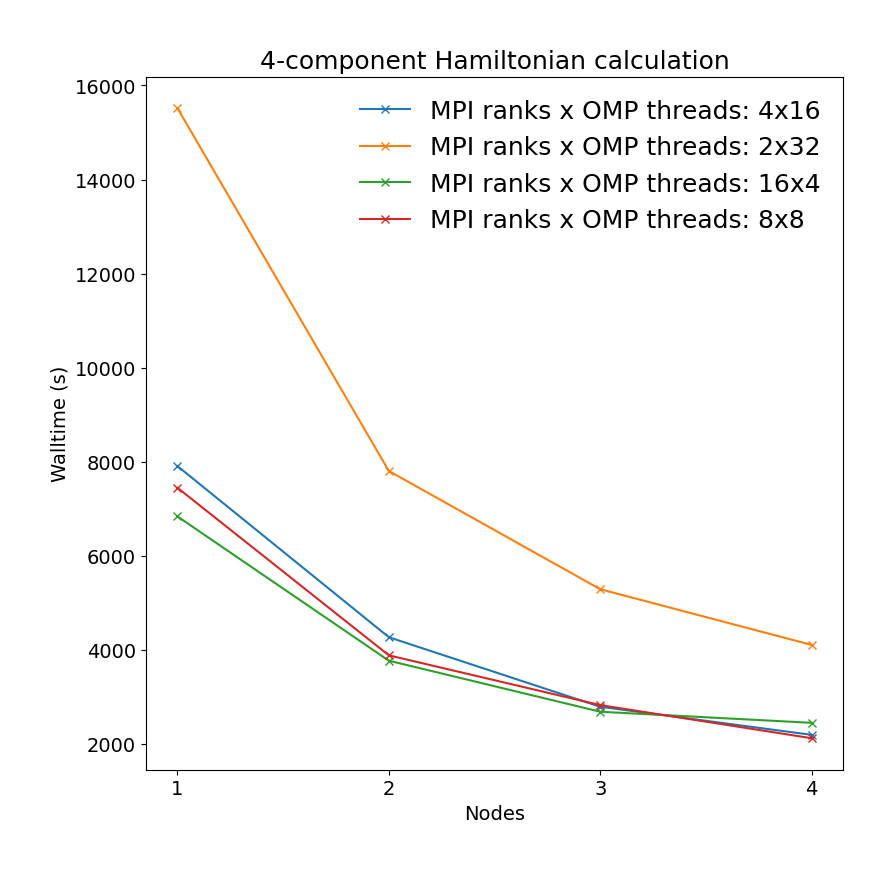

SCF calculation utilizing for PbF molecule 4-component Dirac-Coulomb Hamiltonian (DOSSSS) in conjuction GAUNT correction to the two electron interaction"

| Single node Performance | Cross-node Performance |

|---|---|

|

|

Example run script

You can copy this script to dirac_run.sh, modify it as needed, and submit the job to a compute node with the command sbatch dirac_run.sh.

#!/bin/bash

#SBATCH -J "dirac_job" # name of job in SLURM

#SBATCH --partition=ncpu # selected partition, short, medium or long

#SBATCH --nodes=1 # number of used nodes

#SBATCH --ntasks= # number of mpi tasks (parallel run)

#SBATCH --cpus-per-task= # number of cpus per mpi task (parallel run)

#SBATCH --time=72:00:00 # time limit for a job

#SBATCH -o stdout.%J.out # standard output

#SBATCH -e stderr.%J.out # error output

#SBATCH --exclusive # exclusive run on node

module load DIRAC/22.0-foss-2021a

INPUT=dirac.inp # input file

MOL=dirac.xyz # xyz or mol file

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

pam-dirac --mpi=$SLURM_NTASKS --inp=$INPUT --scratch=/scratch/$USER/DIRAC/$SLURM_JOB_ID --mol=$MOL
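Slurm sets SLURM_CPUS_PER_TASK only when --cpus-per-task is requested, so the export in the script above yields an empty OMP_NUM_THREADS if that option is left out. A minimal sketch of a more defensive variant follows. The helper name omp_threads, the variable SCRATCH_DIR and the fallback strings are hypothetical and not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper: use the Slurm value when present, otherwise one thread.
omp_threads() {
    echo "${SLURM_CPUS_PER_TASK:-1}"
}

export OMP_NUM_THREADS="$(omp_threads)"

# Hypothetical variable holding the per-job scratch path from the script above;
# the fallbacks only keep the sketch runnable outside a Slurm job.
SCRATCH_DIR="/scratch/${USER:-unknown}/DIRAC/${SLURM_JOB_ID:-manual}"

echo "threads=$OMP_NUM_THREADS scratch=$SCRATCH_DIR"
```

Inside a job, these lines could replace the plain export, with $SCRATCH_DIR passed to the --scratch option of pam-dirac.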