Storage Hardware overview

Once you connect to the HPC DEVANA cluster, you have direct access to three storage locations from the Login nodes, and to a fourth one that is accessible only while your job is running. Direct access from the Login nodes is available to the /home, /projects and /scratch storage directories. The /work directory is the local storage of the compute nodes and can only be accessed while a job is running.

Home

The /home storage directory is where you are placed after logging in, and it contains your user directory. Currently, each user can store up to 1 TB of data in total in /home. For more information, please refer to the home quotas section. When a project expires, the data in the /home directory is retained for 3 months.

We do not provide data backup services for any directory (/home, /projects, /scratch, /work).

| Access | Mountpoint | Limitations per user | Backup | Net Capacity | Throughput | Protocol |
|---|---|---|---|---|---|---|
| Login and Compute nodes | /home | 1 TB | no | 547 TB | write 3 GB/s, read 6 GB/s | NFS |

Configuration of the HOME storage:

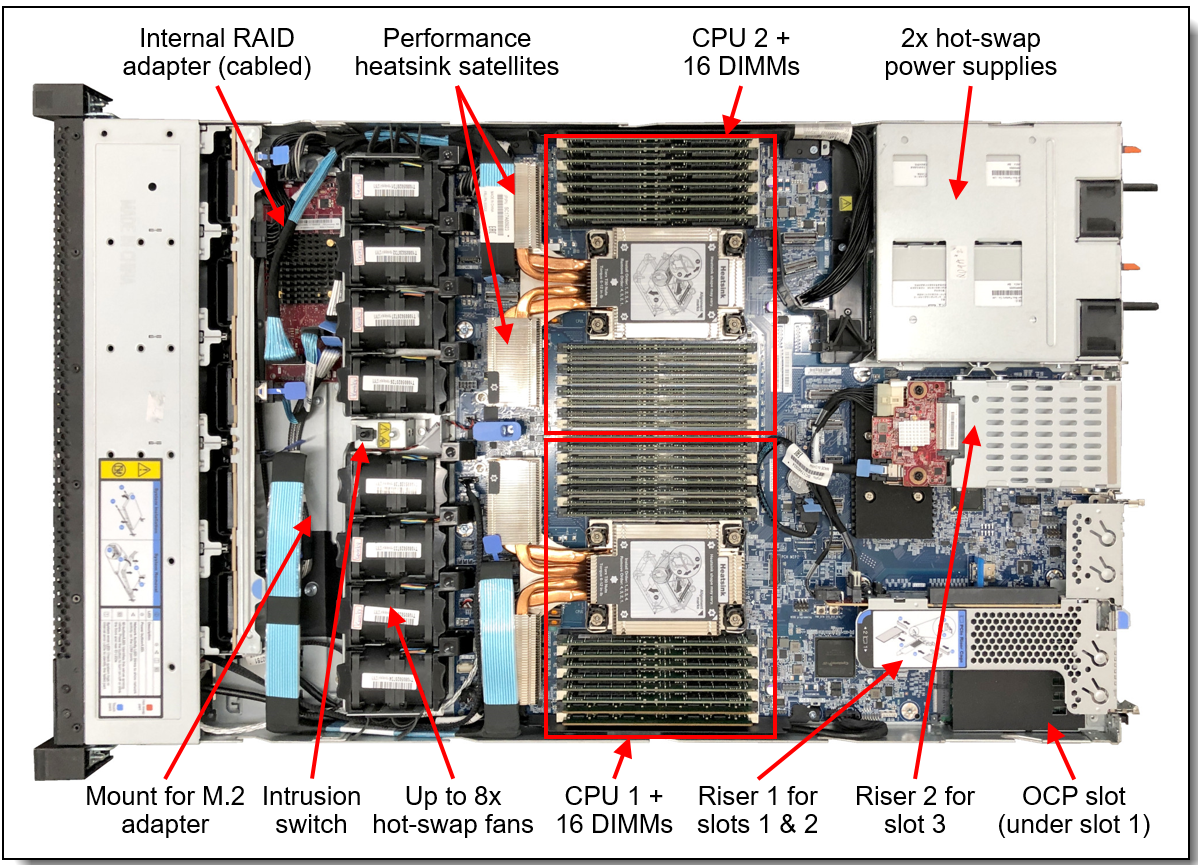

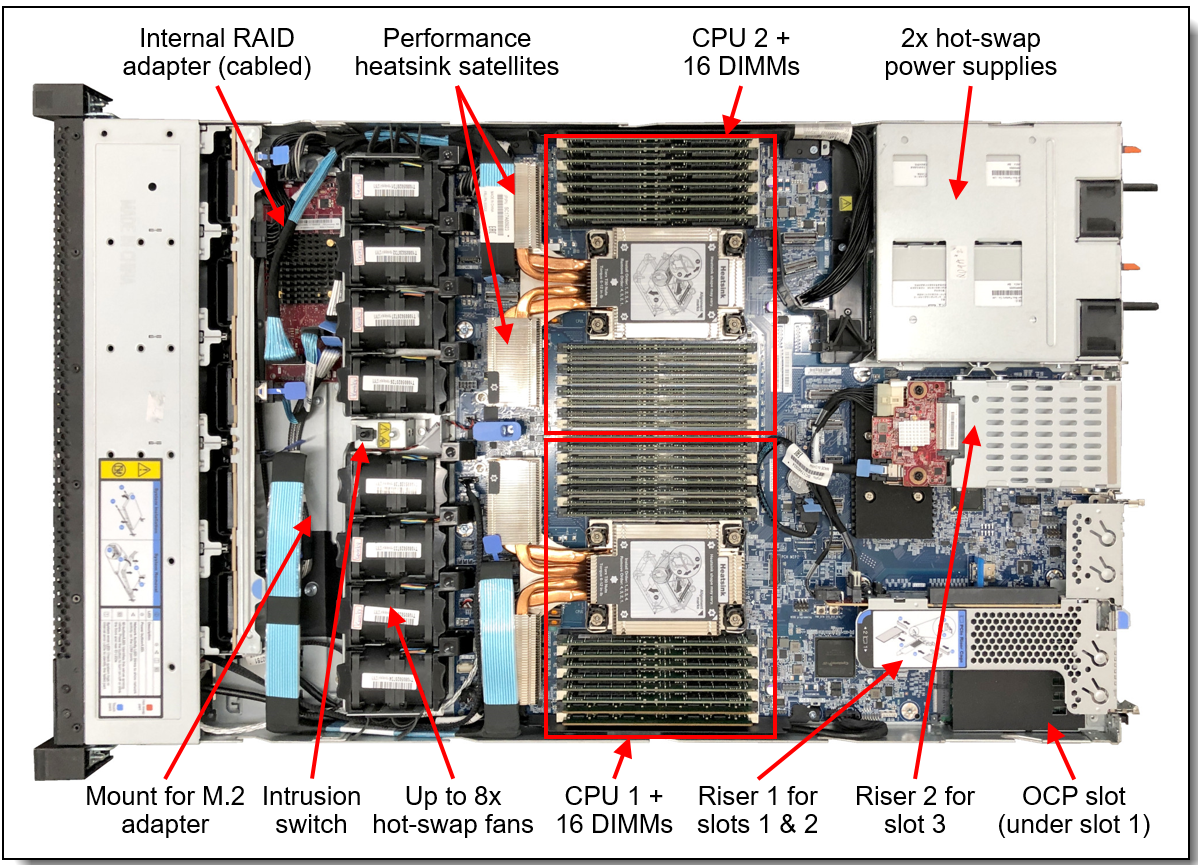

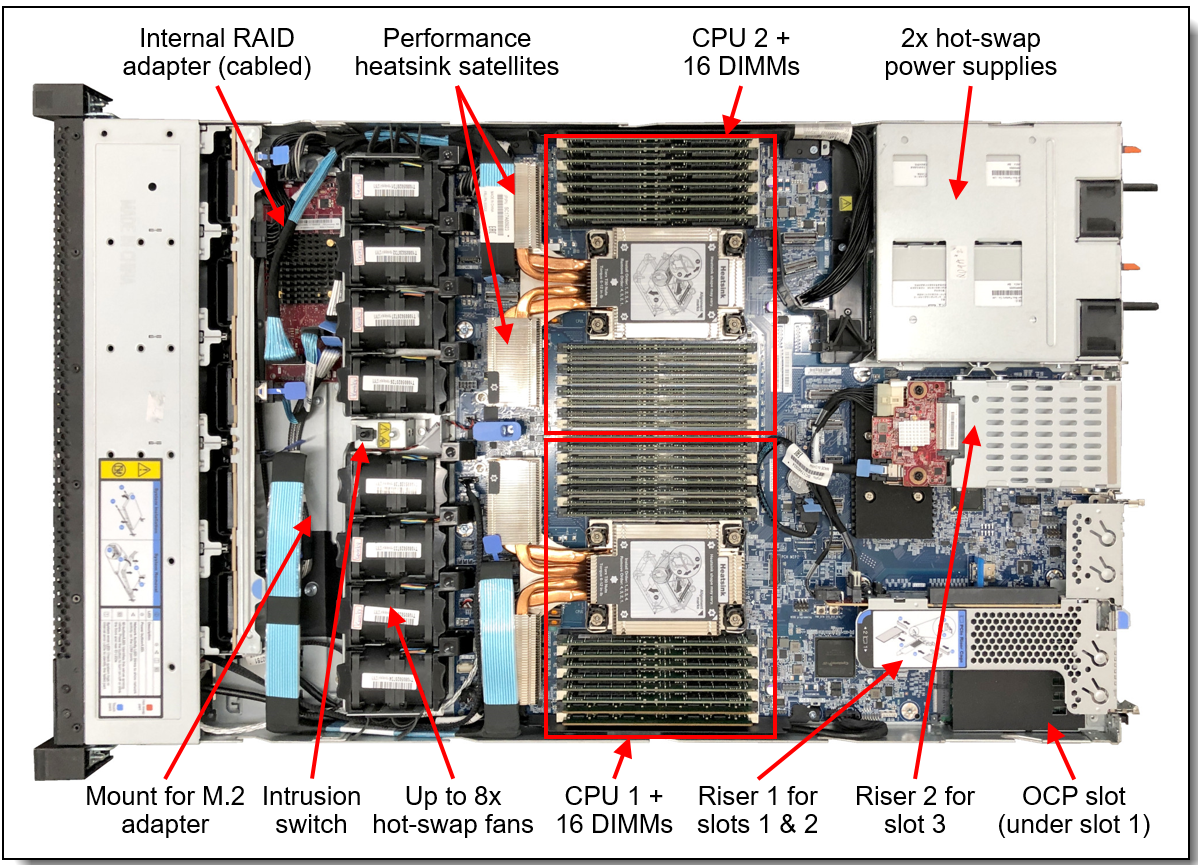

2x ThinkSystem SR630 V2(1U):

- 2x 3rd Gen. Intel® Xeon® Scalable Processors Gold 6338: 2.00GHz/32-core/205W

- 16x 16GB DDR4-3200MHz 8 ECC bits

- 2x 480GB M.2 SATA SSD (HW RAID 1)

- 2x InfiniBand ThinkSystem Mellanox ConnectX-6 HDR/200GbE, QSFP56 1-port, PCIe4

- 1x Ethernet adapter ConnectX-6 10/25 Gb/s Dual-port SFP28, PCIe4 x16, MCX631432AN-ADAB

- 2x ThinkSystem 430-16e SAS/SATA 12Gb HBA

- 2x 1100W Power Supply

- OS: CentOS Linux 7

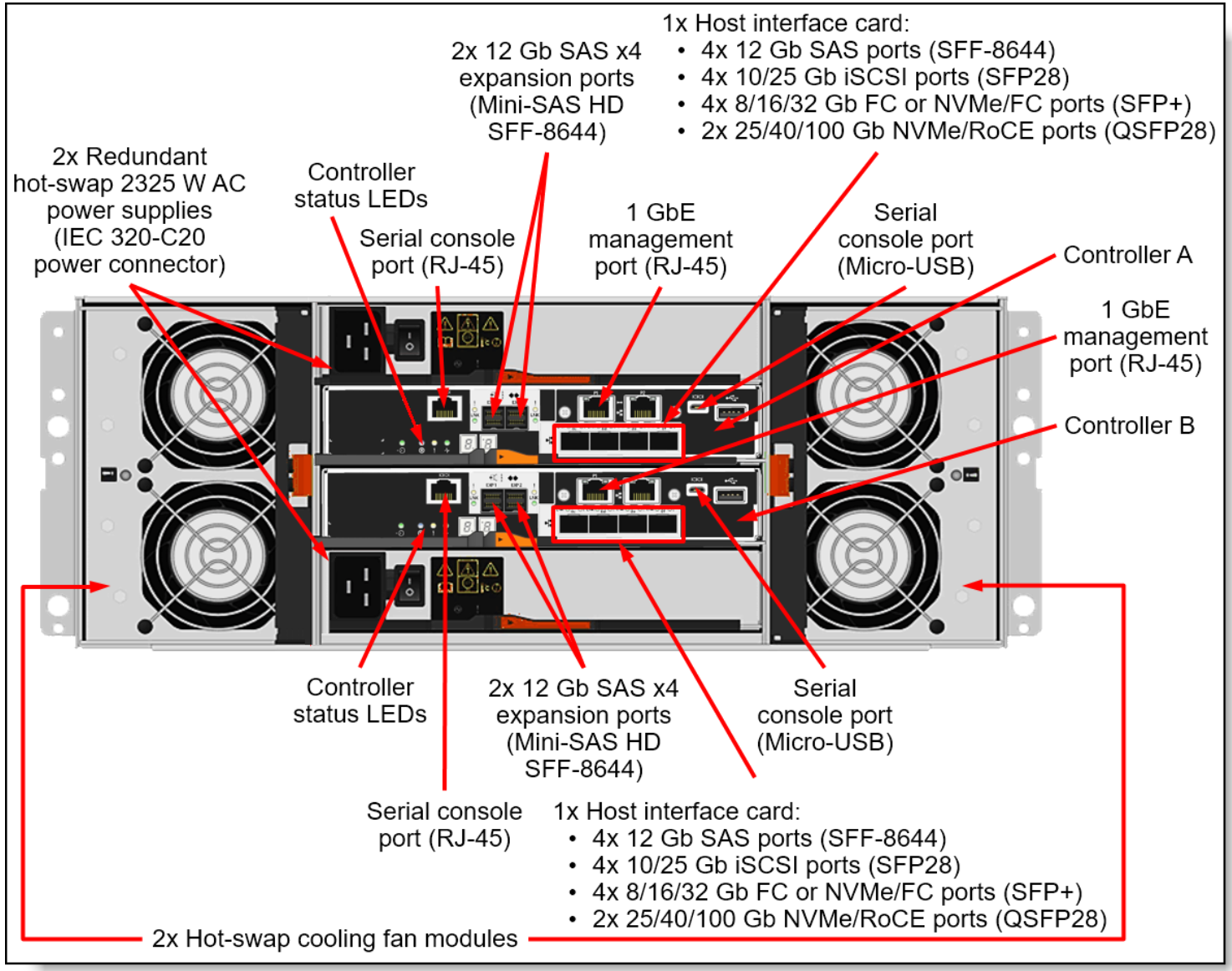

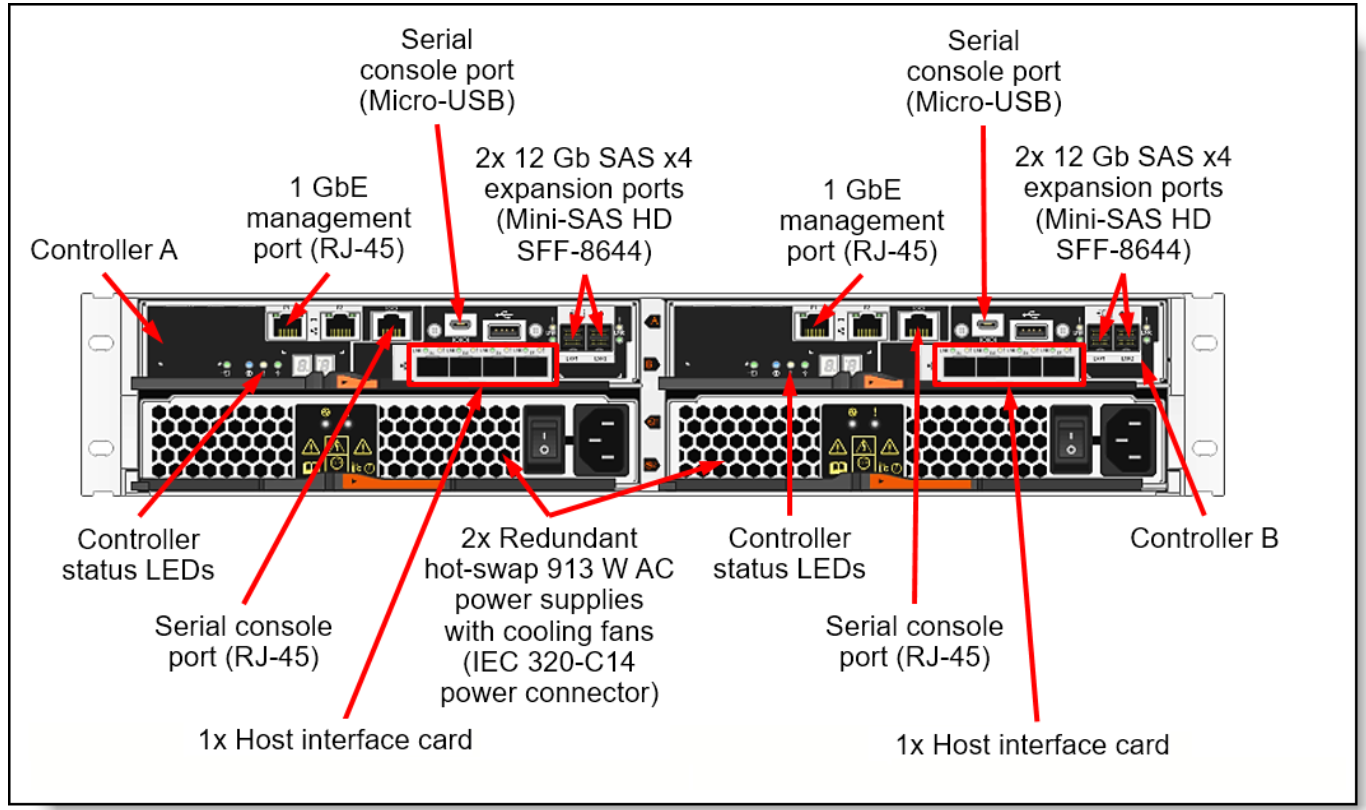

1x DE6000H 4U60 Controller SAS:

- 32GB controller cache

- 100x 3.5" 8TB 7200RPM 256MB SAS 12Gb/s

- RAID level 6

- 8x 12 Gb SAS host ports

- 1x 1 GbE port (UTP, RJ-45) per controller for out-of-band management

Projects

A directory under /projects is created for every active project and is accessible from the Login and Compute nodes. Currently, there are no data limits (quotas). The /projects storage is not intended for computing. Data stored in a project directory remains available for 3 months after the project's conclusion. To find the number of your project's directory on the /projects mountpoint, use the id command. The project number (here 187000062(p70-23-t)) is shown at the very end of the output.

```
mblasko@login01 ~ > id
uid=187000083(mblasko) gid=187000083(mblasko) groups=187000083(mblasko),187000062(p70-23-t)
```
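If you belong to several projects, it can be handy to list the groups one per line. The following is a minimal POSIX shell sketch; the helper name `list_groups` is hypothetical, and it assumes (as in the example above) that the primary user group is the first entry after `groups=` and that project groups follow it. How a group maps to a directory name under /projects is not specified here.

```shell
#!/bin/sh
# Hypothetical helper (not a site-provided tool): print the supplementary
# groups, e.g. project groups, from one line of `id` output read on stdin.
list_groups() {
    # Keep only the comma-separated list after "groups=" (stopping at the
    # first space, in case a "context=" field follows), put one entry per
    # line, and drop the first entry, which is the primary user group.
    sed -n 's/.*groups=\([^ ]*\).*/\1/p' | tr ',' '\n' | tail -n +2
}

# Usage on a login node:
id | list_groups
```

For the example output above, this prints `187000062(p70-23-t)`.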

| Access | Mountpoint | Limitations per user | Backup | Net Capacity | Throughput | Protocol |
|---|---|---|---|---|---|---|
| Login and Compute nodes | /projects | none | no | 269 TB | XXX GB/s | NFS |

Configuration of the PROJECTS storage:

2x ThinkSystem SR630 V2(1U):

- 2x 3rd Gen. Intel® Xeon® Scalable Processors Gold 6338: 2.00GHz/32-core/205W

- 16x 16GB DDR4-3200MHz 8 ECC bits

- 2x 480GB M.2 SATA SSD (HW RAID 1)

- 2x InfiniBand ThinkSystem Mellanox ConnectX-6 HDR/200GbE, QSFP56 1-port, PCIe4

- 1x Ethernet adapter ConnectX-6 10/25 Gb/s Dual-port SFP28, PCIe4 x16, MCX631432AN-ADAB

- 2x ThinkSystem 430-16e SAS/SATA 12Gb HBA

- 2x 1100W Power Supply

- OS: CentOS Linux 7

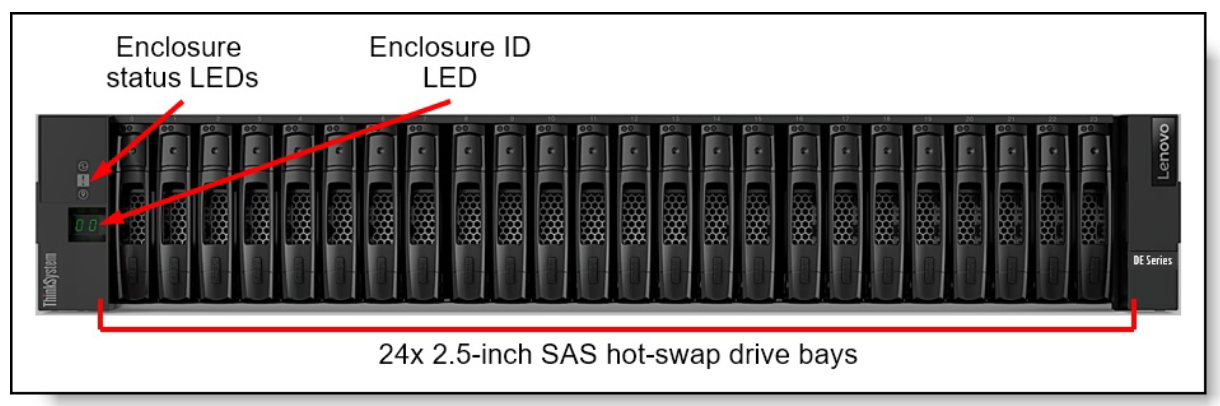

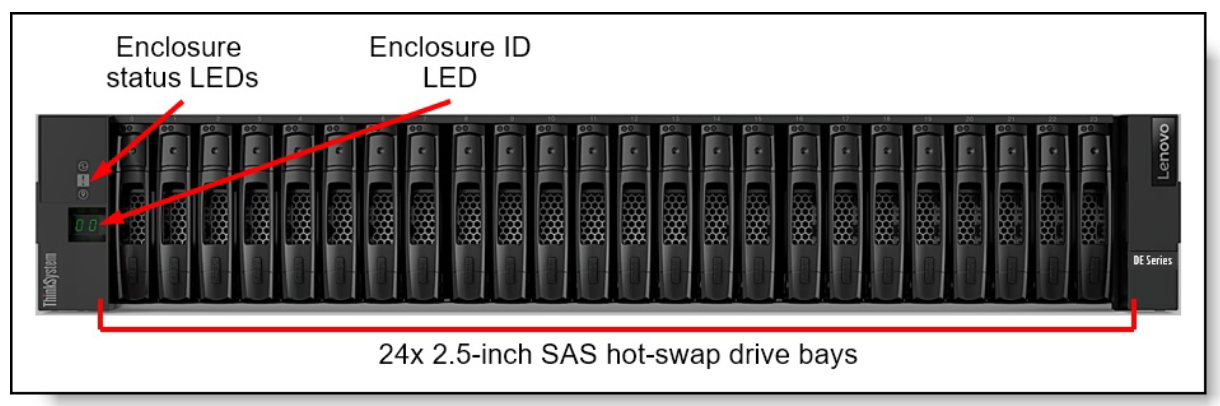

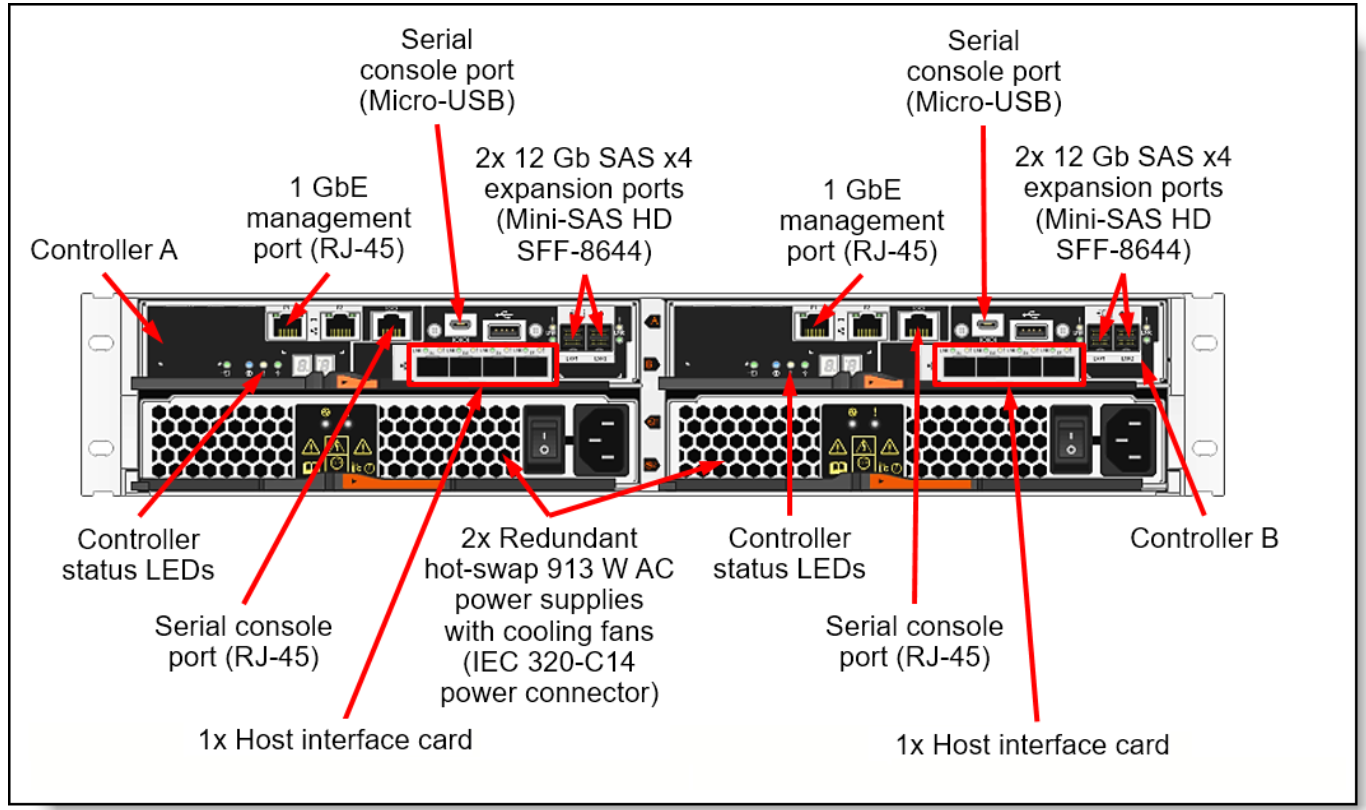

1x DE4000F 2U24 SFF All Flash Storage Array:

- 64GB controller cache

- 24x 2.5" 15.36TB PCIe 4.0 x4/dual port x2

- RAID level 6

- 8x 12 Gb SAS host ports (Mini-SAS HD, SFF-8644) (4 ports per controller)

- 8x 10/25 Gb iSCSI SFP28 host ports (DAC or SW fiber optics [LC]) (4 ports per controller)

- 8x 8/16/32 Gb FC SFP+ host ports (SW fiber optics [LC]) (4 ports per controller)

- 1x 1 GbE port (UTP, RJ-45) per controller for out-of-band management

Scratch

The /scratch directory is designed for temporary data generated during computations. All jobs with heavy I/O requirements should use /scratch as their working directory or, alternatively, the local disk storage of each compute node under the /work mountpoint. After completing their computations, users are required to transfer essential data from /scratch to their /home or /projects directories and to remove temporary files. The /scratch directory is implemented as a parallel BeeGFS filesystem, accessible over the 100 Gb/s InfiniBand network from all login and compute nodes. Its capacity of 269 TB is shared among all users, and there are no quotas for project directories on the /scratch mountpoint.

| Access | Mountpoint | Limitations per user | Backup | Net Capacity | Throughput | Protocol |
|---|---|---|---|---|---|---|
| Login and Compute nodes | /scratch | none | no | 269 TB | XXX GB/s | BeeGFS |
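A job using /scratch typically stages its input in, runs, copies back only the essential results, and then cleans up. The sketch below illustrates that pattern in POSIX shell; the function name `run_in_scratch`, the `results` subdirectory, and all paths are hypothetical placeholders, not a site-provided tool or a required layout.

```shell
#!/bin/sh
# Minimal sketch of the stage-in / compute / copy-back / clean-up pattern.
# run_in_scratch and the "results" subdirectory are hypothetical.
set -eu

run_in_scratch() {
    src=$1        # input data, e.g. a directory under /projects
    scratch=$2    # per-job working directory under /scratch
    dest=$3       # where essential results are kept (/home or /projects)
    shift 3       # remaining arguments: the command to run

    mkdir -p "$scratch" "$dest"
    cp -r "$src"/. "$scratch"/            # stage the input into scratch
    ( cd "$scratch" && "$@" )             # run the I/O-heavy step there
    cp -r "$scratch"/results "$dest"/     # keep only the essential output
    rm -rf "$scratch"                     # remove the temporary files
}

# Example call (placeholder paths and program name):
# run_in_scratch /projects/<project>/input /scratch/<project>/job1 \
#     /projects/<project>/output ./my_solver input.dat
```

Because of `set -e`, a failing command stops the script (when the function is called as a plain command) before the `rm -rf` step, so partial output stays in the scratch directory for inspection; remember to copy or delete it afterwards.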

Configuration of the SCRATCH storage:

2x ThinkSystem SR630 V2(1U):

- 2x 3rd Gen. Intel® Xeon® Scalable Processors Gold 6338: 2.00GHz/32-core/205W

- 16x 16GB DDR4-3200MHz 8 ECC bits

- 2x 480GB M.2 SATA SSD (HW RAID 1)

- 2x InfiniBand ThinkSystem Mellanox ConnectX-6 HDR/200GbE, QSFP56 1-port, PCIe4

- 1x Ethernet adapter ConnectX-6 10/25 Gb/s Dual-port SFP28, PCIe4 x16, MCX631432AN-ADAB

- 2x ThinkSystem 430-16e SAS/SATA 12Gb HBA

- 2x 1100W Power Supply

- OS: CentOS Linux 7

1x DE4000F 2U24 SFF All Flash Storage Array:

- 64GB controller cache

- 24x 2.5" 15.36TB PCIe 4.0 x4/dual port x2

- RAID level 5

- 8x 12 Gb SAS host ports (Mini-SAS HD, SFF-8644) (4 ports per controller)

- 8x 10/25 Gb iSCSI SFP28 host ports (DAC or SW fiber optics [LC]) (4 ports per controller)

- 8x 8/16/32 Gb FC SFP+ host ports (SW fiber optics [LC]) (4 ports per controller)

- 1x 1 GbE port (UTP, RJ-45) per controller for out-of-band management

Work

Like /scratch, the /work directory serves as temporary data storage during computations, and data must be moved and deleted once the job is completed. The /work directory is the local storage of the compute node and is accessible only during an active job. Its capacity depends on the node: nodes 001-049 and 141-146 have 3.5 TB of /work capacity, while the remaining nodes have 1.8 TB. There are no user or project quotas on the /work mountpoint.

| Access | Mountpoint | Limitations per user | Backup | Net Capacity | Throughput | Protocol |
|---|---|---|---|---|---|---|
| Compute nodes | /work | none | no | 1.8/3.5 TB | XXX GB/s | XFS |
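Because the /work capacity differs between nodes (3.5 TB or 1.8 TB), a job may want to check that its input fits before staging it there, and use /scratch otherwise. Below is a minimal POSIX shell sketch based on `du` and `df`; the function name `fits_in` is hypothetical, and the check ignores the space the job's own output will need.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the data in $1 fits into the free space
# of the filesystem holding directory $2 (e.g. node-local /work).
fits_in() {
    src=$1
    target=$2
    # Size of the source data in 1 KiB blocks.
    need=$(du -sk "$src" | awk '{print $1}')
    # Available space on the target filesystem in 1 KiB blocks;
    # -P forces the portable one-line-per-filesystem output format.
    avail=$(df -Pk "$target" | awk 'NR==2 {print $4}')
    [ "$need" -lt "$avail" ]
}

# Inside a job (placeholder path):
# if fits_in /projects/<project>/input /work; then echo "use /work"; else echo "use /scratch"; fi
```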

The technical specifications of individual computing nodes can be found in the following table. For more detailed information about the compute nodes, please refer to the compute nodes section.

| Feature | n[001-048] | n[049-140] |
|---|---|---|
| Processor | 2x Intel Xeon Gold 6338 CPU @ 2.00 GHz | 2x Intel Xeon Gold 6338 CPU @ 2.00 GHz |
| RAM | 256 GB DDR4 RAM @ 3200 MHz | 256 GB DDR4 RAM @ 3200 MHz |
| Disk | 3.84 TB NVMe SSD @ 5.8 GB/s, 362 kIOPS | 1.92 TB NVMe SSD @ 2.3 GB/s, 166 kIOPS |
| Network | 100 Gb/s HDR InfiniBand | 100 Gb/s HDR InfiniBand |