Directory Structure

Available filesystems

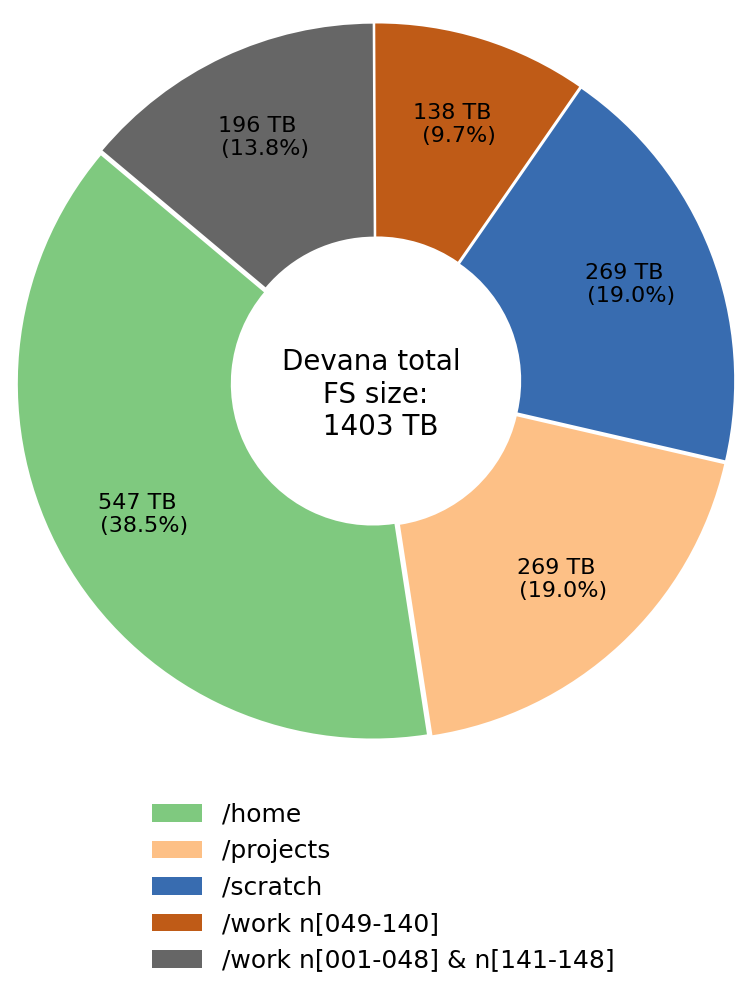

Filesystems currently available on the Devana supercomputer.

- /home
    - personal home directory, unique for each user
    - used for storing personal results
- /projects
    - shared project directory, accessible to all project participants
    - used for storing project results
- /scratch
    - shared directory for large files, accessible to all project participants
    - used during calculations for files whose size exceeds the capacity of local disks
- /work
    - local storage on each compute and GPU node
    - used during calculations for files whose size does not exceed its capacity
    - accessible only with a running job

Where to store data?

You can use the following directories for your data:

| Path (mounted at) | Quota | Purging | Protocol |
|---|---|---|---|
| /home/username/ | 1 TB | 3 months after the end of the project | NFS |

Description

Personal home directory. You can check its path with the echo $HOME command.

| Path (mounted at) | Quota | Purging | Protocol |
|---|---|---|---|
| /projects/<project_id> | unlimited | 3 months after the end of the project | NFS |

Description

Shared project directory for all project participants.

| Path (mounted at) | Quota | Purging | Protocol |
|---|---|---|---|
| /scratch/<project_id> | unlimited | 3 months after the end of the project | BeeGFS |
| /work/$SLURM_JOB_ID | unlimited | automatically after the job termination | XFS |

Description

Directories for temporary files created during calculations, accessible only through compute nodes while the job is running.

- /scratch/<project_id>: shared scratch directory accessible from all compute nodes
- /work/$SLURM_JOB_ID: local scratch directory, unique for the selected compute node (see more below)
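As a rough illustration of how a job script might pick between the two temporary locations, the sketch below prefers the node-local /work/$SLURM_JOB_ID directory when it exists and otherwise falls back to a shared path passed by the caller. The function name choose_tmpdir and its arguments are hypothetical; only the directory layout is taken from the table above.

```shell
# Hypothetical helper: print the directory to use for temporary files.
# $1 = fallback path (for example the shared /scratch/<project_id> directory)
# $2 = root of the node-local storage (assumed to be /work unless overridden)
choose_tmpdir() {
    local fallback="$1"
    local work_root="${2:-/work}"
    # SLURM_JOB_ID is set by Slurm inside a running job; outside a job it is empty.
    if [ -n "${SLURM_JOB_ID:-}" ] && [ -d "${work_root}/${SLURM_JOB_ID}" ]; then
        printf '%s\n' "${work_root}/${SLURM_JOB_ID}"
    else
        printf '%s\n' "$fallback"
    fi
}
```

Inside a job script this could be called as cd "$(choose_tmpdir /scratch/<project_id>)". Because /work is purged automatically when the job terminates, results would need to be copied to /projects or /home before the script exits.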

Where to run calculations

| Mountpoint | Capacity | Access nodes | Throughput (write/read) |
|---|---|---|---|
| /home | 547 TB | Login and Compute/GPU | 3 GB/s & 6 GB/s |
| /projects | 269 TB | Login and Compute/GPU | XXX |
| /scratch | 269 TB | Login and Compute/GPU | 7 GB/s & 14 GB/s |
| /work | 3.5 TB | Compute/GPU: n[001-048], n[141-148] | 3.6 GB/s & 6.7 GB/s |
| /work | 1.5 TB | Compute: n[049-140] | 1.9 GB/s & 3.0 GB/s |

Filesystem choice

The optimal filesystem for a given application depends on many parameters and should be tested before starting large calculations. Generally speaking, /work should offer the best performance for calculations whose files fit within its capacity.
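One simple way to compare candidate filesystems before a large run is a sequential write test. The following is a minimal sketch, assuming GNU dd (for bs=1M and conv=fsync). The helper name write_test is hypothetical, and a single dd stream is only a crude proxy for the I/O pattern of real software.

```shell
# Hypothetical helper: write SIZE_MB megabytes of zeros into DIR and
# print dd's summary line, which includes the measured throughput.
# $1 = directory to test, $2 = size in MB (default 1024)
write_test() {
    local dir="$1"
    local size_mb="${2:-1024}"
    local testfile="${dir}/.write_test.$$"
    # conv=fsync makes dd flush the data to storage before it reports,
    # so the page cache does not inflate the figure.
    dd if=/dev/zero of="$testfile" bs=1M count="$size_mb" conv=fsync 2>&1 | tail -n 1
    # Remove the test file so the benchmark leaves nothing behind.
    rm -f -- "$testfile"
}
```

Running write_test /scratch/<project_id> and write_test /work/$SLURM_JOB_ID from within the same job gives two figures that can be compared directly. The measured values depend on the current load and will not necessarily match the table above.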

Quotas

Quotas keep track of the storage space used on a per-user or per-group basis. They are measured both in the number of files and in the total size of files. File sizes are counted in blocks.
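Because the number of files counts against the quota as well as their size, it can help to check how many entries a directory tree holds. The sketch below uses find and wc for this; count_entries is a hypothetical name, and the result only approximates what the quota system counts (file names containing newlines would be counted more than once).

```shell
# Hypothetical helper: count files and directories below DIR,
# excluding DIR itself.
# $1 = directory to inspect
count_entries() {
    find "$1" -mindepth 1 | wc -l
}
```

For example, count_entries /home/<user>/<dir> prints a single number that can be compared between directories to find those holding many small files.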

Determine quotas

Users can determine their quota usage by running the following command:

login01:~$ quota -s

User quota on /home

Disk quotas for user <user> (uid <user_id>):

Filesystem space quota limit grace files quota limit grace

store-nfs-ib:/storage/home

507G 1000G 1024G 2196k* 2048k 2098k
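In the sample output above the files column reads 2196k*; the quota tool appends an asterisk to a value that exceeds its quota. A small filter, sketched here under the assumption that the output format matches the sample, can flag such lines in scripts. The name quota_exceeded is hypothetical.

```shell
# Hypothetical helper: read `quota -s` output on standard input, print the
# lines that contain a value marked with '*', and return success if any
# such line was found.
quota_exceeded() {
    # Matches a number with an optional unit suffix followed by an asterisk,
    # for example "2196k*" or "1024G*".
    grep -E '[0-9]+[A-Za-z]?\*'
}
```

A possible use is quota -s | quota_exceeded > /dev/null && echo "quota exceeded", for instance at the start of a job script.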

The du -sh command can be used to view the size of individual directories:

login01:~$ du -sh /home/<user>/<dir>
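To rank the subdirectories of a directory by size rather than print a single total, du can be combined with find and sort. This is a minimal sketch; largest_dirs is a hypothetical helper and sizes are reported in kilobytes.

```shell
# Hypothetical helper: list the N largest immediate subdirectories of DIR,
# largest first, with sizes in kilobytes.
# $1 = directory to inspect, $2 = number of entries to show (default 10)
largest_dirs() {
    local dir="$1"
    local n="${2:-10}"
    # find selects only direct subdirectories (hidden ones included),
    # du -sk prints one total per subdirectory, sort -rn orders by size.
    find "$dir" -mindepth 1 -maxdepth 1 -type d -exec du -sk {} + \
        | sort -rn \
        | head -n "$n"
}
```

For example, largest_dirs /home/<user> 5 prints the five largest subdirectories of the home directory.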

Alternatively, users can use the dust command, which prints the directory tree together with the largest files it contains.

The depth of the tree can be specified with the -d parameter.

Output of dust command

login01:~$ dust /home/<user>/<dir>

640G ─┬ .

523G ├─┬ ADMIN

227G │ ├─┬ qe

226G │ │ └─┬ benchmarks-master

209G │ │ └─┬ GRIR443

59G │ │ ├── GRIR.wfc1

29G │ │ ├── GRIR.wfc2

14G │ │ └── GRIR.wfc4

128G │ ├─┬ cp2k

128G │ │ └─┬ FIST

69G │ │ ├── nodes

55G │ │ └── nodes_new

71G │ ├─┬ python

71G │ │ └─┬ miniconda3

36G │ │ ├── envs

34G │ │ └── pkgs

60G │ └── orca

68G ├─┬ MD

67G │ └── Softbark_wood

22G └─┬ Docking

16G └── COVID19

Exceeded quotas

If you exceed your storage quotas, you have several options:

- Remove any unneeded files and directories from your directories.

- Tar and compress files/directories from your directories. Since the write permissions are suspended, you can use your /scratch subdirectory to tar and zip the files, remove them from /home, and then copy the tar-zipped file back to /home or store it locally:

  login01:~$ tar czvf /scratch/<user>/data_backup.tgz /home/<user>/<Directories-files-to-tar-and zip>

  Verify the archive and delete original files:

  login01:~$ cd /scratch/<user>

  login01:~$ tar xzvf data_backup.tgz # Verify the content

  login01:~$ rm -r /home/<user>/<Directories-files-to-tar-and zip>

  Move data from scratch to avoid deletion:

  login01:~$ cp /scratch/<user>/data_backup.tgz /projects/<user>/data_backup.tgz

  login01:~$ rm /scratch/<user>/data_backup.tgz

  This procedure frees only a limited amount of space, so it should be immediately followed by downloading the data and storing it locally.

- Have the principal investigator (PI) justify the requirements for additional storage to be approved for the project.
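The archive, verify, and delete steps of the second option can be combined so that the originals are removed only when the archive was created and can be read back. The following is a sketch under that assumption; archive_and_remove is a hypothetical helper, and it checks the archive by listing it with tar -t instead of extracting it.

```shell
# Hypothetical helper: archive SRC into ARCHIVE, check that the archive can be
# read, and only then delete SRC.
# $1 = file or directory to archive, $2 = path of the .tgz file to create
archive_and_remove() {
    local src="$1"
    local archive="$2"
    local parent name
    parent=$(dirname -- "$src")
    name=$(basename -- "$src")
    # -C stores paths relative to the parent directory instead of absolute paths.
    tar -czf "$archive" -C "$parent" "$name" || return 1
    # Listing the archive reads it end to end; a failure here keeps SRC intact.
    tar -tzf "$archive" > /dev/null || return 1
    rm -r -- "$src"
}
```

A call such as archive_and_remove /home/<user>/<dir> /scratch/<user>/data_backup.tgz would cover the first two command groups of the procedure above. Moving the archive off /scratch afterwards remains a separate step.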