ORCA

Description

ORCA is an ab initio quantum chemistry program package that contains modern electronic structure methods, including density functional theory, many-body perturbation theory, coupled cluster, multireference methods, and semi-empirical quantum chemistry methods. Its main field of application is larger molecules, transition metal complexes, and their spectroscopic properties. ORCA is developed in the research group of Frank Neese. The free version is available only for academic use at academic institutions.

Versions

The following versions of the ORCA package are currently available:

- ORCA/5.0.4-OpenMPI-4.1.4
    - Runtime dependencies:
        - OpenMPI/4.1.4-GCC-12.2.0

You can load the selected version, together with its runtime dependencies, as a single module with the following command:

module load ORCA/5.0.4-OpenMPI-4.1.4

User guide

You can find the software documentation and user guide here. To download the PDF manual, you must first register at the community forum.

Benchmarking ORCA

To understand how ORCA uses the hardware available on Devana, and how to get good performance from it, we examine how the number of MPI ranks per node affects benchmark performance.

The following command was used to run the benchmarks:

/storage-apps/easybuild/software/ORCA/5.0.4-OpenMPI-4.1.4/orca ${benchmark}.inp > ${benchmark}.out

Info

Single-node benchmarks were run on the /work/ storage local to each compute node, which is generally faster than the shared storage hosting the /home/ and /scratch/ directories.

Benchmarks were run on the following systems:

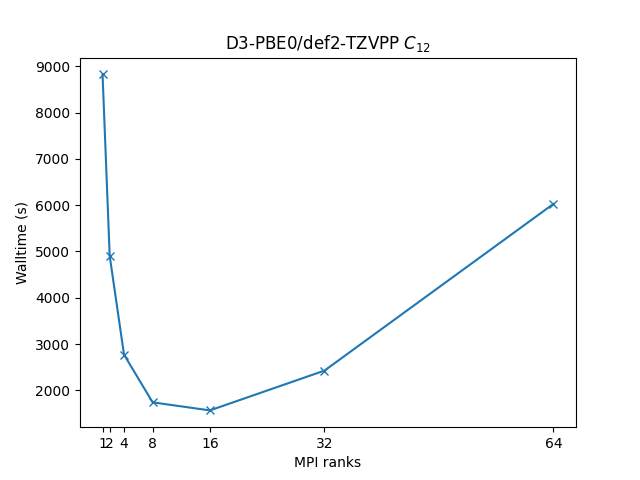

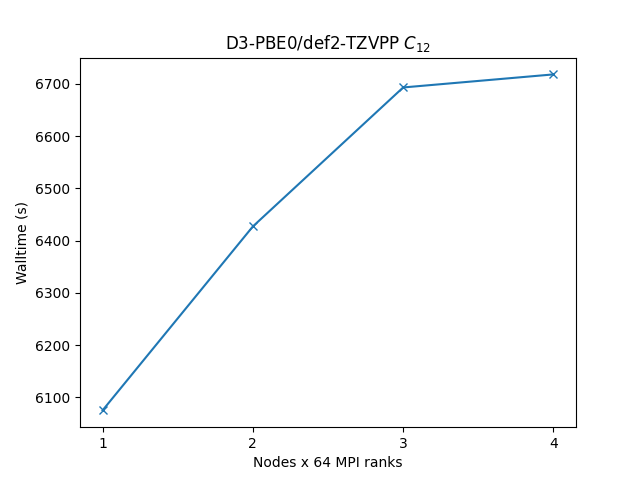

Geometry optimization of C12 benzene dimer D3-PBE0/def2-TZVPP level of theory illustrating the most time-consuming part of the calculation, i.e. SCF calculations.

| Single node Performance | Cross-node Performance |

|---|---|

|

|

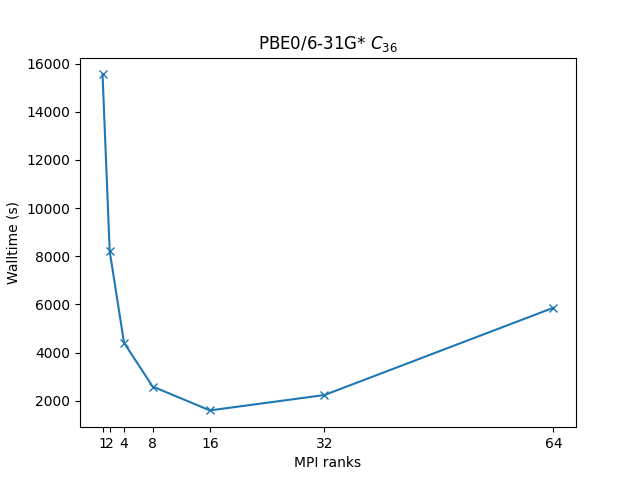

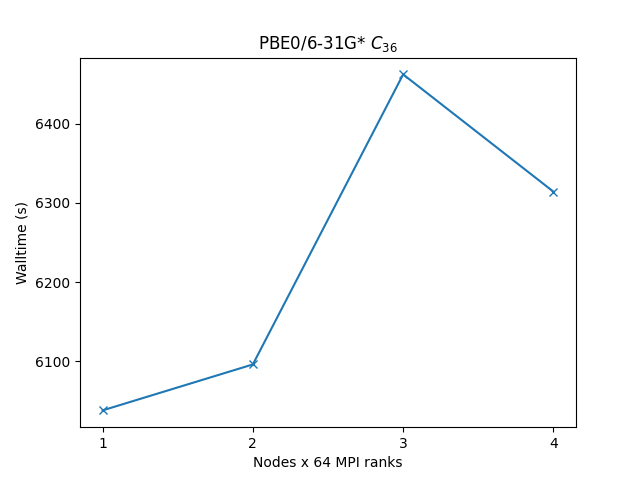

Geometry optimization of C36 fullerene at PBE0/6-31G* level of theory illustrating the most time-consuming part of the calculation, i.e. SCF calculations.

| Single node Performance | Cross-node Performance |

|---|---|

|

|

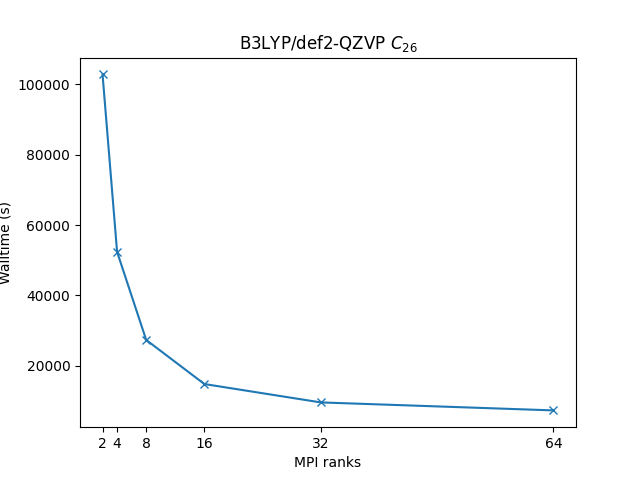

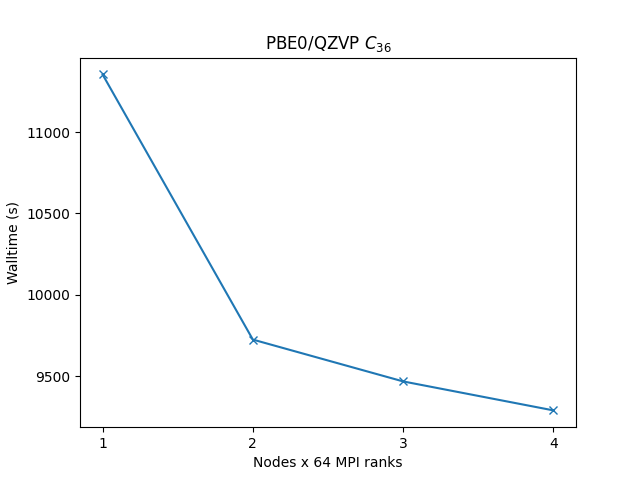

Geometry optimization of C36 fullerene at PBE0/def2-QZVP* level of theory illustrating the most time-consuming part of the calculation, i.e. SCF calculations.

| Single node Performance | Cross-node Performance |

|---|---|

|

|

The benchmarks show that ORCA calculations do not scale with the number of available cores. The number of MPI ranks that gives the best performance depends on the number of functions in the basis set: the best performance shifts from 16 cores with the TZVPP basis set to 64 cores with QZVP.
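To find a suitable rank count for your own system, one option is to run the same input with several rank counts and compare the wall times. The sketch below assumes that each run wrote its output to a file named like bench_np16.out (a hypothetical naming scheme) and that the output contains ORCA's final TOTAL RUN TIME line in the format shown in the comment. Adjust the pattern if your output differs.

```shell
#!/bin/bash
# Sketch: compare ORCA wall times across runs with different rank counts.
# Assumes each output contains a line of the form
#   TOTAL RUN TIME: 0 days 0 hours 1 minutes 23 seconds 456 msec

# Print the total run time of one ORCA output file in whole seconds.
orca_runtime_seconds() {
    awk '/TOTAL RUN TIME:/ {
        print $4 * 86400 + $6 * 3600 + $8 * 60 + $10
        exit
    }' "$1"
}

# Print "ranks seconds" for every file matching PREFIX_np<ranks>.out,
# fastest run first. The file naming scheme is hypothetical.
rank_runs_by_time() {
    local prefix=$1 f ranks secs
    for f in "${prefix}"_np*.out; do
        [ -f "$f" ] || continue
        ranks=${f##*_np}      # strip everything up to the last "_np"
        ranks=${ranks%.out}   # strip the ".out" suffix
        secs=$(orca_runtime_seconds "$f")
        # Skip runs that did not finish and therefore have no timing line.
        if [ -n "$secs" ]; then
            echo "$ranks $secs"
        fi
    done | sort -k2,2n
}
```

Calling `rank_runs_by_time bench` in the directory that holds the outputs lists the rank counts ordered by run time, which makes it easy to see where adding ranks stops paying off.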

Example run script

Disks

For the best performance, use the local /work storage, even when running cross-node calculations.

MPI ranks

For parallel runs, set the number of processes with the nprocs keyword in a %pal block instead of using the palN keyword in the input file.

%pal

nprocs ${MPI ranks}

end
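One way to keep nprocs consistent with the number of tasks requested from SLURM is to write the %pal block from the job script itself. The sketch below assumes the job was submitted with --ntasks, so that SLURM sets the SLURM_NTASKS variable. The method line and the geometry file name benzene_dimer.xyz are illustrative placeholders, not the benchmark inputs.

```shell
#!/bin/bash
# Sketch: generate an ORCA input whose nprocs matches the SLURM allocation.
# SLURM_NTASKS is set by SLURM when --ntasks is given; fall back to 1 otherwise.
NPROCS=${SLURM_NTASKS:-1}

# The keywords and the geometry file name are illustrative placeholders.
cat > orca_input.inp <<EOF
! PBE0 D3BJ def2-TZVPP Opt
%pal
  nprocs ${NPROCS}
end
* xyzfile 0 1 benzene_dimer.xyz
EOF
```

In a job script such as the one below, this fragment would run before the input file is copied to the scratch directory.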

You can copy the following script to orca_run.sh, adapt it, and submit the job to a compute node with the command sbatch orca_run.sh.

#!/bin/bash

#SBATCH -J "orca_job" # name of job in SLURM

#SBATCH --account=<project> # project number

#SBATCH --partition= # selected partition (short, medium, long)

#SBATCH --ntasks= # number of mpi ranks (parallel run)

#SBATCH --time=hh:mm:ss # time limit for a job

#SBATCH -o stdout.%J.out # standard output

#SBATCH -e stderr.%J.out # error output

module load ORCA/5.0.4-OpenMPI-4.1.4

INIT_DIR=$(pwd)

SCRATCH=/work/$SLURM_JOB_ID

mkdir -p "$SCRATCH"

INPUT=orca_input.inp

OUTPUT=orca_output.out

cp "$INPUT" "$SCRATCH/$INPUT"

cd "$SCRATCH" || exit 1

# For parallel runs, ORCA must be called with the full path to the binary.

/storage-apps/easybuild/software/ORCA/5.0.4-OpenMPI-4.1.4/orca "$INPUT" > "$INIT_DIR/$OUTPUT"
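The script above writes only the main output to the submission directory. Other files that ORCA produces stay in the scratch directory on the compute node. A minimal sketch of copying them back and cleaning up follows. The helper name collect_results is hypothetical, and skipping *.tmp files assumes that ORCA's temporary files carry that suffix.

```shell
#!/bin/bash
# Hypothetical helper: copy result files from node-local scratch back to the
# submission directory, then remove the scratch directory.
collect_results() {
    local scratch=$1 dest=$2 f
    # Refuse to continue with an empty or missing path before any rm -rf.
    [ -n "$scratch" ] && [ -d "$scratch" ] || return 1
    for f in "$scratch"/*; do
        [ -f "$f" ] || continue              # skip subdirectories and empty globs
        case "$f" in
            *.tmp) continue ;;               # assumed suffix of ORCA temporaries
        esac
        cp -p "$f" "$dest"/ || return 1      # keep scratch if a copy fails
    done
    rm -rf -- "$scratch"
}

# Usage at the end of the job script, after the ORCA run:
#   collect_results "$SCRATCH" "$INIT_DIR"
```

The batch script runs on the first node of the allocation, so in a cross-node job this collects only the files in that node's /work directory.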